Databricks Brings People, Data and Technology Together

Let's get started crafting the best-fit solution for your data.

Why HiFX?

HiFX is a Certified Consulting & System Integrator Partner of Databricks. This partnership lets us draw on Databricks' Unified Analytics expertise, solution architects and sales resources to better serve our customers.

With Databricks We Make Big Data Simple!

We achieve 100X performance gains, 30% faster deployment and 20% better stability.

Working with Databricks since 2017

Databricks-trained consultants and developers

Create and Find New Opportunities

Streaming and analyzing big data in real time can help companies uncover hidden patterns, correlations and other insights. Companies get answers almost immediately, so they can upsell and cross-sell to clients based on what the data shows.

Real-time streaming technology brings the kind of predictability that cuts costs, solves problems and grows sales. It has led to new business models, product innovations and revenue streams.

Unified and simplified architecture across batch and streaming to serve all use cases

Robust data pipelines that ensure data reliability with ACID transactions and data quality guarantees

Reduced compute times and costs with a scalable cloud runtime powered by highly optimized Spark clusters

Elastic cloud resources automatically scale up with workloads and scale down to save costs

Modern data engineering best practices for improved productivity, system stability and data reliability

Let's get to know Databricks

The only open unified platform for data management, business analytics and machine learning.

Users achieve faster time-to-value with Databricks by creating analytic workflows that go from interactive exploration and ETL through to production data products.

AI applications are simpler to explore and move to production because data scientists and data engineers work on the same platform. Users can quickly prepare clean data at massive scale and continuously train and deploy state-of-the-art ML models for best-in-class AI applications.

Databricks makes it easier for its users to focus on their data by providing a fully managed, scalable and secure cloud infrastructure that reduces operational complexity and total cost of ownership.

Data Lakehouses

Combine the capabilities of data lakes and data warehouses to enable BI and ML on all your data.

Data lakehouses are enabled by a new system design: implementing data structures and data management features similar to those in a data warehouse directly on the kind of low-cost storage used for data lakes.

Merging the two into a single system means data teams can move faster, because they can use data without accessing multiple systems. Data lakehouses also ensure that teams have the most complete and up-to-date data available for data science, machine learning and business analytics projects.

A Lakehouse has the following key features:

- Transaction support

- End-to-end streaming

- Openness

- Schema enforcement and governance

- Support for BI

- Storage is decoupled from compute

- Support for diverse workloads

- Support for diverse data types ranging from unstructured to structured data
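Schema enforcement means a write is rejected when incoming records do not match the table's declared schema, instead of silently landing malformed data. The sketch below is a toy, in-memory illustration in plain Python under that assumption; the `Table` class, its schema format and `SchemaMismatchError` are hypothetical names, not the Databricks or Delta Lake API.

```python
from dataclasses import dataclass, field
from typing import Any


class SchemaMismatchError(ValueError):
    """Raised when a batch of records does not match the table schema."""


@dataclass
class Table:
    # Hypothetical toy table: column name -> expected Python type.
    schema: dict[str, type]
    rows: list[dict[str, Any]] = field(default_factory=list)

    def append(self, batch: list[dict[str, Any]]) -> None:
        # Validate the whole batch before writing anything, so one bad record
        # rejects the entire write instead of leaving a partial batch behind.
        for i, record in enumerate(batch):
            if set(record) != set(self.schema):
                raise SchemaMismatchError(
                    f"record {i}: columns {sorted(record)} != {sorted(self.schema)}"
                )
            for col, expected in self.schema.items():
                if not isinstance(record[col], expected):
                    raise SchemaMismatchError(
                        f"record {i}: column {col!r} expected {expected.__name__}, "
                        f"got {type(record[col]).__name__}"
                    )
        self.rows.extend(batch)


orders = Table(schema={"order_id": int, "amount": float})
orders.append([{"order_id": 1, "amount": 9.5}])
try:
    # "ten" is a str, so the whole batch (including the valid order 2) is rejected.
    orders.append([{"order_id": 2, "amount": 12.0}, {"order_id": 3, "amount": "ten"}])
except SchemaMismatchError as err:
    print("rejected:", err)
print(len(orders.rows))  # 1
```

Validating the full batch before appending is a deliberate choice here: it mirrors the all-or-nothing behaviour that transaction support gives a real lakehouse table.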

Delta Lake

Delta Lake brings reliability, performance and lifecycle management to data lakes.

No more malformed data ingestion, difficulty deleting data for compliance, or issues modifying data for change data capture. Speed up how quickly high-quality data reaches your data lake and how quickly teams can use it, with a secure and scalable cloud service.

Benefits

OPEN & EXTENSIBLE

Data is stored in the open Apache Parquet format, allowing data to be read by any compatible reader. APIs are open and compatible with Apache Spark™.

DATA RELIABILITY

Data lakes often have data quality issues due to a lack of control over ingested data. Delta Lake adds a storage layer to data lakes that manages data quality, ensuring they contain only high-quality data for consumers.
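Roughly speaking, Delta Lake records every change to a table as an ordered series of JSON commit files in a `_delta_log` directory next to the Parquet data files. Readers rebuild the current table state by replaying those commits, and concurrent writers race to create the next commit file, so only one writer wins each version. The sketch below imitates that idea with the Python standard library only; the file layout, action format and the names `commit`, `snapshot` and `CommitConflict` are simplified, hypothetical stand-ins, not Delta's real protocol. It assumes a local filesystem where `os.link` fails if the target already exists.

```python
import json
import os
import tempfile


class CommitConflict(Exception):
    """Another writer already created this version of the log."""


def log_path(log_dir: str, version: int) -> str:
    # Zero-padded names keep lexical order equal to numeric order.
    return os.path.join(log_dir, f"{version:020d}.json")


def latest_version(log_dir: str) -> int:
    versions = [int(n.split(".")[0]) for n in os.listdir(log_dir) if n.endswith(".json")]
    return max(versions, default=-1)


def commit(log_dir: str, version: int, actions: list[dict]) -> None:
    # Write the commit to a temp file first so readers never see a half-written commit.
    fd, tmp = tempfile.mkstemp(dir=log_dir, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump(actions, f)
    try:
        # os.link refuses to overwrite an existing file: exactly one writer wins each version.
        os.link(tmp, log_path(log_dir, version))
    except FileExistsError:
        raise CommitConflict(f"version {version} already exists") from None
    finally:
        os.remove(tmp)


def snapshot(log_dir: str) -> set[str]:
    # Replay commits in order to compute the current set of live data files.
    files: set[str] = set()
    for v in range(latest_version(log_dir) + 1):
        with open(log_path(log_dir, v)) as f:
            for action in json.load(f):
                if "add" in action:
                    files.add(action["add"])
                elif "remove" in action:
                    files.discard(action["remove"])
    return files


with tempfile.TemporaryDirectory() as log_dir:
    commit(log_dir, 0, [{"add": "part-000.parquet"}])
    commit(log_dir, 1, [{"add": "part-001.parquet"}, {"remove": "part-000.parquet"}])
    try:
        commit(log_dir, 1, [{"add": "part-002.parquet"}])  # a concurrent writer loses
    except CommitConflict as err:
        print("conflict:", err)
    print(snapshot(log_dir))  # {'part-001.parquet'}
```

A losing writer in a real system would re-read the log, check whether its change still applies and retry at the next version; that retry loop is left out to keep the sketch short.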

MANAGE DATA LIFECYCLE

Handle changing records and evolving schemas as business requirements change. And go beyond Lambda architecture with truly unified streaming and batch using the same engine, APIs and code.
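"Same engine, APIs and code" for streaming and batch means business logic is written once and applied either to a whole dataset or to small micro-batches as data arrives. In Spark this is done with DataFrame operations; the sketch below only illustrates the idea in plain Python, with no Spark involved. The names `enrich`, `run_batch` and `run_streaming`, and the 10% tax rate, are hypothetical example values.

```python
from collections.abc import Iterable, Iterator

Record = dict[str, float]


def enrich(records: Iterable[Record]) -> list[Record]:
    # Business logic written once: keep positive orders and add a tax column.
    # (The 10% rate is a made-up example value.)
    return [{**r, "tax": round(r["amount"] * 0.10, 2)} for r in records if r["amount"] > 0]


def run_batch(table: list[Record]) -> list[Record]:
    # Batch mode: apply the logic to the whole dataset at once.
    return enrich(table)


def run_streaming(source: Iterator[Record], batch_size: int) -> list[Record]:
    # Streaming (micro-batch) mode: apply the same logic to each chunk as it arrives.
    sink: list[Record] = []
    chunk: list[Record] = []
    for record in source:
        chunk.append(record)
        if len(chunk) == batch_size:
            sink.extend(enrich(chunk))
            chunk = []
    if chunk:  # flush the final, partial micro-batch
        sink.extend(enrich(chunk))
    return sink


orders = [{"amount": 10.0}, {"amount": 0.0}, {"amount": 25.5}, {"amount": 4.0}]
# Both execution modes produce the same result from the same code.
assert run_batch(orders) == run_streaming(iter(orders), batch_size=2)
```

Because `enrich` is record-by-record, splitting the input into micro-batches cannot change the result; logic that aggregates across records would additionally need state carried between batches.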

See the Results for Yourself

Who Uses Databricks

Data Analysts

- Perform data analysis using SQL at scale

- Explore datasets visually and interactively in a notebook environment

Data Scientists

- Monitor machine learning processes

- Develop production machine learning pipelines

- Explore machine learning models

Data Engineers

- Develop, test, execute and monitor batch ETL jobs

- Implement data streaming ingestion or analytics jobs

- Collaborate on code, notebooks and jobs

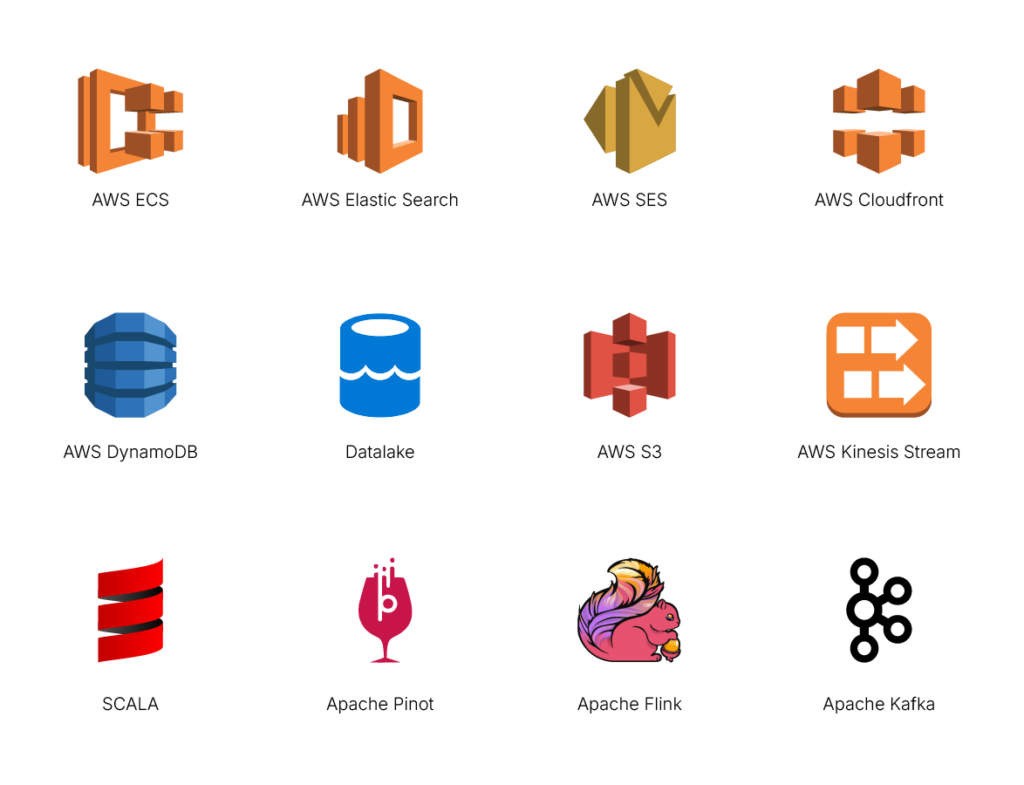

Our Tech Stack

Experience Databricks Live

Ready to supercharge your data analytics and ML workflows?

Unleash Your Data's Full Potential

Join our interactive demo, where our experts show how Databricks can streamline your data architecture and accelerate innovation, all in one unified platform.

Databricks Workspace

Databricks Runtime

Databricks Cloud

FAQs

1. How does Databricks integrate with existing data tools and platforms?

Databricks integrates with a wide range of data tools and platforms, including:

- Data storage (e.g., AWS S3, Azure Data Lake, Google Cloud Storage).

- ETL tools (e.g., Informatica, Talend, dbt).

- BI tools (e.g., Tableau, Power BI, Looker).

- Data orchestration frameworks like Apache Airflow.

- Popular data formats (e.g., Parquet, ORC, JSON).

- Native integration with Spark APIs, allowing flexibility with existing big data workflows.

2. What security measures does Databricks implement to protect data?

Databricks offers robust security through multiple layers of protection, including:

- Encryption: End-to-end encryption for data in transit and at rest.

- Role-Based Access Control (RBAC): Fine-grained access to workspaces, notebooks, and data.

- Audit Logging: Tracks actions to ensure compliance.

- Compliance Standards: Adheres to SOC 2, HIPAA, GDPR, and more.

- Private Link Support: Ensures secure communication via private networking.

- Multi-Tenancy Isolation: Protects data in shared environments.
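Role-based access control grants permissions to roles and assigns roles to users, so every access check resolves the user's roles and looks for the requested permission, denying by default. The sketch below is a toy, standard-library illustration of that model; the role names, object names and permission strings are hypothetical examples. In Databricks itself, permissions are managed through the platform's own access-control settings and, for data objects, SQL `GRANT` statements.

```python
from dataclasses import dataclass, field


@dataclass
class AccessControl:
    # role -> set of (permission, object) pairs, e.g. ("SELECT", "sales.orders")
    role_grants: dict[str, set[tuple[str, str]]] = field(default_factory=dict)
    # user -> set of roles assigned to that user
    user_roles: dict[str, set[str]] = field(default_factory=dict)

    def grant(self, role: str, permission: str, obj: str) -> None:
        self.role_grants.setdefault(role, set()).add((permission, obj))

    def assign(self, user: str, role: str) -> None:
        self.user_roles.setdefault(user, set()).add(role)

    def is_allowed(self, user: str, permission: str, obj: str) -> bool:
        # Deny by default: allowed only if one of the user's roles holds the grant.
        return any(
            (permission, obj) in self.role_grants.get(role, set())
            for role in self.user_roles.get(user, set())
        )


acl = AccessControl()
acl.grant("analyst", "SELECT", "sales.orders")
acl.grant("engineer", "MODIFY", "sales.orders")
acl.assign("alice", "analyst")
print(acl.is_allowed("alice", "SELECT", "sales.orders"))  # True
print(acl.is_allowed("alice", "MODIFY", "sales.orders"))  # False
```

Granting to roles rather than to individual users keeps permissions manageable: onboarding a new analyst is a single `assign` call instead of re-granting every object.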

3. Can Databricks handle real-time data analytics?

Databricks can handle real-time analytics by integrating with tools like Apache Kafka, Event Hubs, or Pub/Sub for streaming data. Its Delta Live Tables feature allows real-time data processing with incremental updates, making it ideal for use cases like monitoring, fraud detection, and recommendation engines.
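Incremental updates mean each run processes only the data that arrived since the previous run, tracked by a stored checkpoint, instead of recomputing everything from scratch. The sketch below shows that checkpoint idea with a running per-user event count in plain Python; it is a hypothetical illustration, and `IncrementalCounter` is not part of the Delta Live Tables API.

```python
from dataclasses import dataclass, field


@dataclass
class IncrementalCounter:
    # Running aggregate (events per user) plus the checkpoint:
    # the offset of the next unread event in the append-only log.
    counts: dict[str, int] = field(default_factory=dict)
    checkpoint: int = 0

    def update(self, event_log: list[str]) -> int:
        """Process only events appended since the last run; return how many were new."""
        new_events = event_log[self.checkpoint:]
        for user in new_events:
            self.counts[user] = self.counts.get(user, 0) + 1
        self.checkpoint = len(event_log)  # advance only after the new events are applied
        return len(new_events)


log = ["u1", "u2", "u1"]
agg = IncrementalCounter()
agg.update(log)       # processes 3 events
log += ["u2", "u3"]   # new data arrives
agg.update(log)       # processes only the 2 new events
print(agg.counts)     # {'u1': 2, 'u2': 2, 'u3': 1}
```

The cost of each run grows with the amount of new data rather than the size of the full history, which is what makes low-latency use cases such as monitoring practical.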

4. What support and resources are available for Databricks users?

Databricks users have access to:

- Documentation: Detailed guides, tutorials, and API references.

- Community Support: A large and active user community for knowledge sharing.

- Customer Support Plans: Tiers include Standard, Premium, and Enterprise support.

- Training and Certifications: Online and instructor-led courses for all skill levels.

- Partner Ecosystem: Access to Databricks-certified partners for custom solutions.

5. How does Databricks simplify the implementation of machine learning models?

Databricks simplifies machine learning by:

- Integrating with MLflow for end-to-end model lifecycle management.

- Offering pre-built libraries for data preparation and feature engineering.

- Providing scalable compute resources for training and testing models.

- Supporting collaboration among data scientists and engineers through shared notebooks.

- Allowing deployment of models directly into production with minimal effort.

6. How does Databricks handle large-scale data collaboration?

Databricks supports collaboration at scale through:

- Shared Notebooks: Allows multiple users to edit, review, and run code together.

- Version Control: Tracks changes for reproducibility.

- Role-Based Access: Ensures secure, managed data sharing.

- Interactive Dashboards: Lets teams visualize and share insights.

- Delta Sharing: A secure protocol for sharing live data across organizations.